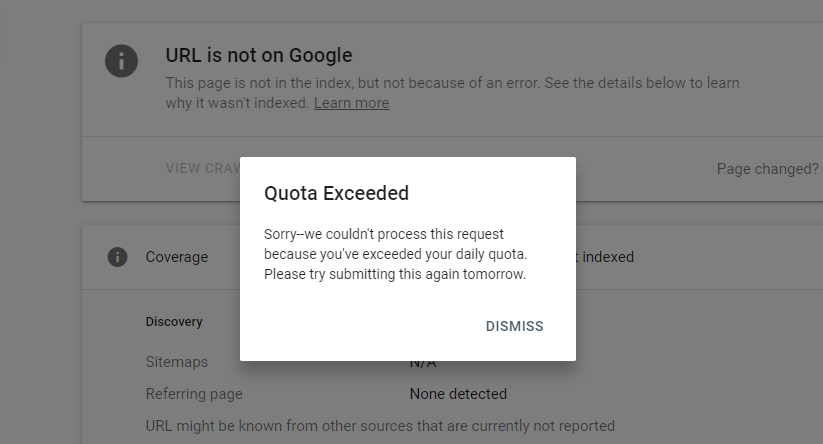

The quick answer to this question is 10 to 12 URLs per 24 hours. After you hit your quota, you will be met with this alert.

“Request Indexing” Returns on December 22, 2020

After months of the “request indexing” tool being unavailable, the feature returned right before the end of 2020. Many SEOs praised its return, but that was about it; they went on about their day. We didn’t hear much about how the feature had changed or what limitations it might now have.

I had the perfect website to put it to the test… hence this very unscientific case study.

We’re glad to announce that ‘Request Indexing’ is back to the Google Search Console URL Inspection – just in time for the new year! 🎆

Read more about how to use this feature in our Help Center 🔧 https://t.co/m1KD0do5Oi pic.twitter.com/Mh0q2ShoYa

Google Search Central (@googlesearchc), December 22, 2020

Stress Testing The Request Indexing Feature

One of my clients launched a website that went from a few thousand pages to 500,000+ pages overnight. The team was fully aware that it would be a shock to Google’s system, and we continue to wait patiently for Google to crawl, discover, and index all of this content. The good (not great) news is that most of our site is sitting in the “discovered – currently not indexed” bucket. Google defines this status as follows:

Discovered – currently not indexed: The page was found by Google, but not crawled yet. Typically, Google tried to crawl the URL but the site was overloaded; therefore Google had to reschedule the crawl. This is why the last crawl date is empty on the report.

As mentioned before, this website was re-launched, and the massive jump in size is likely overloading Google. Over time, we continue to see the “discovered – currently not indexed” number decrease and the number of indexed pages increase.

However, now that the “request indexing” tool has returned, it seemed like a good time to spot-submit some critical pages directly to Google. Patience is indeed key with this website, but it was time to stress test this tool.

How Many URLs Can You Now Submit?

Before the tool was deactivated, many users could submit up to 50 URLs per day. After a few consecutive days of maxing out my submissions, I found that I wasn’t able to submit more than 12 URLs in a 24-hour period. The error message shown once you reach your quota says “Please try submitting this [URL] again tomorrow”. However, I found that I couldn’t submit any additional URLs until 24 hours later.

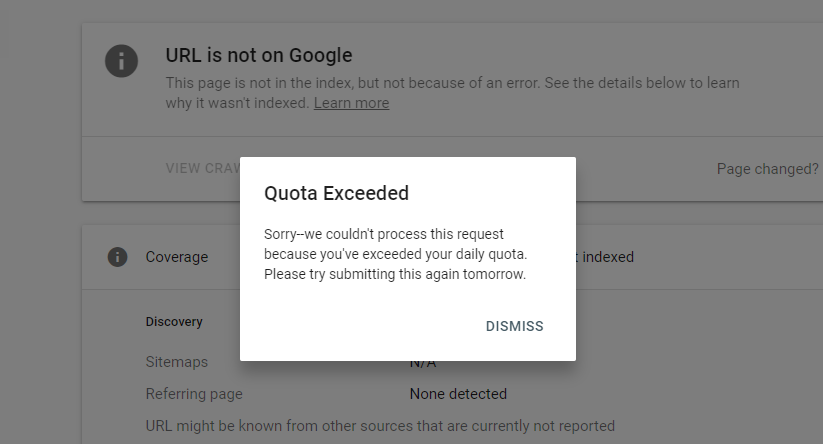
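To plan submissions around this limit, the observation above can be sketched as a small helper. This is only an illustration: the limit of 12 comes from my test, not from any documented Google quota, and treating the 24 hours as a rolling window is an assumption. The function name `next_submission_time` is hypothetical.

```python
from datetime import datetime, timedelta

# Observed in this test only; Google does not publish this number.
DAILY_LIMIT = 12
# Assumption: the quota behaves like a rolling 24-hour window.
WINDOW = timedelta(hours=24)


def next_submission_time(submitted_at, now, limit=DAILY_LIMIT, window=WINDOW):
    """Return `now` if a slot looks free, else the estimated time one opens."""
    # Keep only submissions that still count against the window.
    recent = sorted(t for t in submitted_at if now - t < window)
    if len(recent) < limit:
        return now
    # A slot opens when the oldest submission that fills the quota ages out.
    return recent[len(recent) - limit] + window


if __name__ == "__main__":
    now = datetime(2021, 1, 5, 12, 0)
    # Twelve submissions made one minute apart, about an hour ago.
    history = [now - timedelta(hours=1, minutes=i) for i in range(12)]
    print("Next estimated slot:", next_submission_time(history, now))
```

If the real quota resets differently, only the `WINDOW` logic needs to change; the submission log itself stays the same.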

Is Google Attempting To Prevent Automation?

On the second day of my test, I submitted my 11th URL to GSC and hit the “request indexing” button. This is when I was given a CAPTCHA. I never once saw this in the previous version of the request indexing tool.

Interestingly, after I clicked through the CAPTCHA, that was the last URL I was able to submit. This was one URL fewer (11) than I was able to submit the previous day (12). I was only able to trigger this CAPTCHA once during this test.

Does The Request Indexing Feature Provide Instant Indexing?

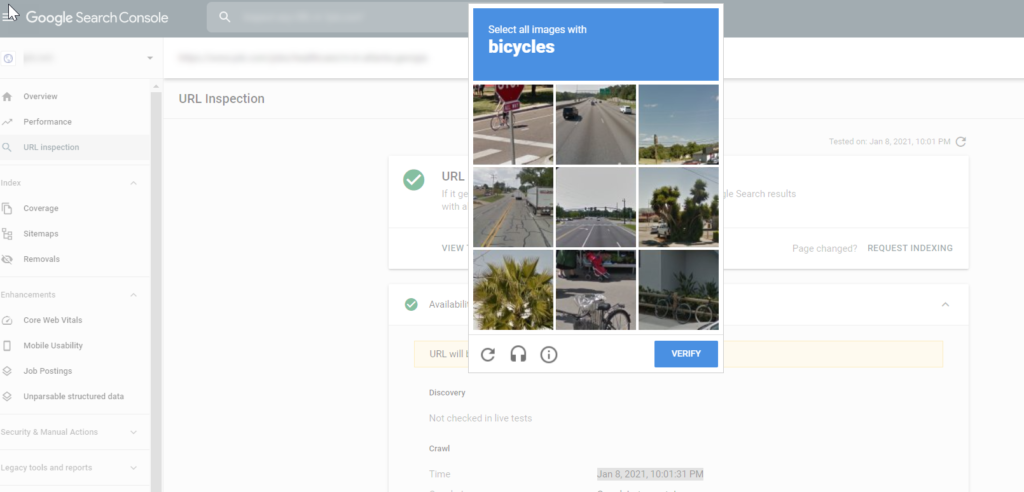

Sadly, no. Each time I maxed out the URL submissions, I immediately inspected the URLs to see whether GSC reported them as indexed. In a few tests, one or two URLs were indexed within minutes. Most, however, took 12 to 24 hours, but every submitted URL was indexed in the end: a 100% success rate.

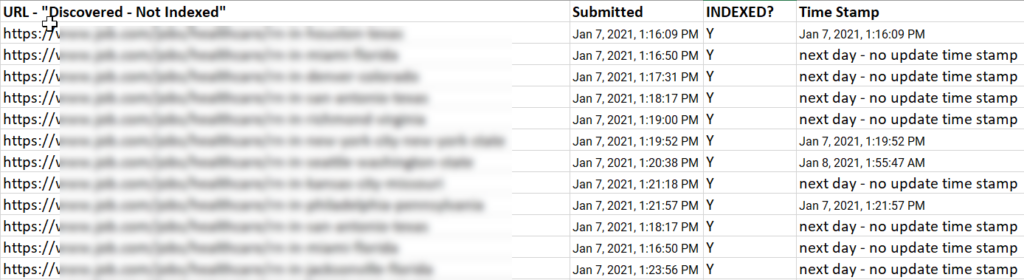

Assuming your content meets Google’s indexing requirements, you can expect it to be indexed fairly quickly after you submit it to Google. Below is a screenshot of my first test, in which I was able to submit 12 URLs. 3 URLs were indexed almost instantly and the other 9 followed soon after.

Are GSC Timestamps Reliable?

As mentioned previously, one of my clients is in the process of getting all of their new content indexed. This has forced me to live in our server logs in order to identify (and fix) any issues that are causing Google trouble.

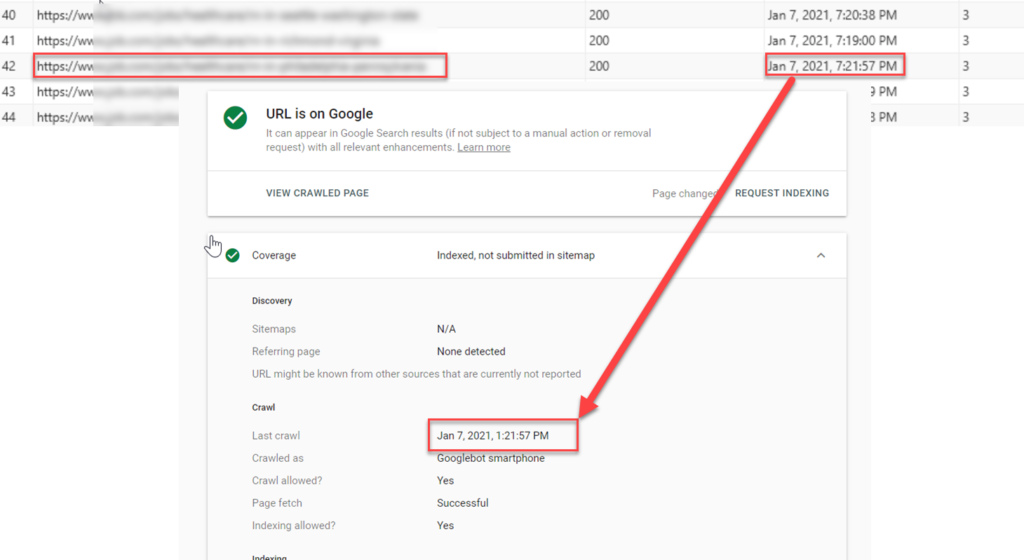

In reviewing the server logs after submitting URLs to Google, I was able to match our server discovery dates directly to the timestamps provided by Google in GSC. (Notice that the hour is different due to my server log configuration; XX:XX:XX PM was still correct.)
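For anyone who wants to repeat this comparison, here is a rough sketch of pulling Googlebot hits out of an access log and shifting the timestamps into the time zone you are comparing against. It assumes the common "combined" log format; the sample log line, the path, and the function name `googlebot_hits` are all made up for illustration. Matching on the user agent alone is a simplification, since user agents can be spoofed.

```python
import re
from datetime import datetime, timedelta, timezone

# Assumes the "combined" access log format; adjust for your server's config.
LOG_PATTERN = re.compile(
    r'\[(?P<ts>[^\]]+)\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def googlebot_hits(lines, display_tz):
    """Return (path, timestamp) pairs for Googlebot requests, shown in display_tz."""
    hits = []
    for line in lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match.group("ua"):
            continue
        # Parse the log's own offset, then convert to the zone used for comparison.
        ts = datetime.strptime(match.group("ts"), "%d/%b/%Y:%H:%M:%S %z")
        hits.append((match.group("path"), ts.astimezone(display_tz)))
    return hits


if __name__ == "__main__":
    # Hypothetical log line, not taken from the real site.
    sample = [
        '66.249.66.1 - - [05/Jan/2021:18:42:10 +0000] "GET /products/example '
        'HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; '
        '+http://www.google.com/bot.html)"'
    ]
    eastern = timezone(timedelta(hours=-5))  # example offset only
    for path, ts in googlebot_hits(sample, eastern):
        print(path, ts.isoformat())
```

Converting both sides to the same offset before comparing is what explains an hour mismatch like the one I saw between my logs and GSC.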

“Inspect URL” Limit? Or Bug?

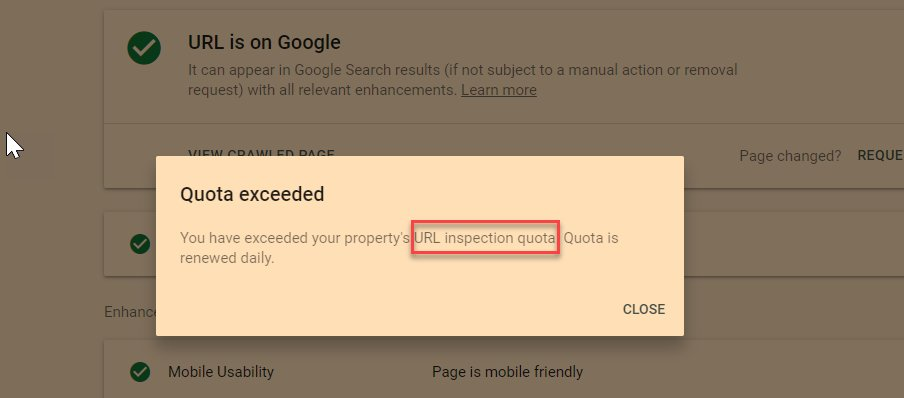

Everything above should help answer the questions of how many pages you can submit and how effective the request indexing feature is. However, I also wanted to share an interesting issue (a bug?) that I ran into. I can’t replicate it, and it only happened the day after I hit my request indexing limit for the first time.

You will notice that this “quota exceeded” message for URL inspection is different from the one shown when you exceed your “request indexing” limit. This happened at the domain property level; moving to the https://www property allowed me to inspect more URLs, but not to submit any more URLs via the request indexing feature.